Pyspark Scenarios 11 : how to handle double delimiter or multi delimiters in pyspark #pyspark

Pyspark Scenarios 23 : How do I select a column name with spaces in PySpark? #pyspark #databricksПодробнее

Pyspark Scenarios 22 : How To create data files based on the number of rows in PySpark #pysparkПодробнее

Pyspark Scenarios 21 : Dynamically processing complex json file in pyspark #complexjson #databricksПодробнее

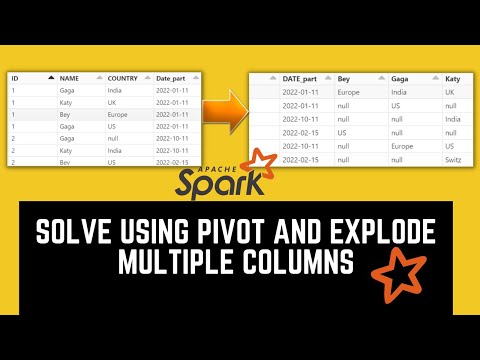

8. Solve Using Pivot and Explode Multiple columns |Top 10 PySpark Scenario-Based Interview Question|Подробнее

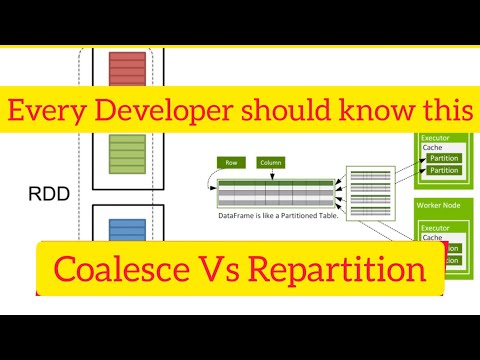

Pyspark Scenarios 20 : difference between coalesce and repartition in pyspark #coalesce #repartitionПодробнее

Pyspark Scenarios 19 : difference between #OrderBy #Sort and #sortWithinPartitions TransformationsПодробнее

Pyspark Scenarios 18 : How to Handle Bad Data in pyspark dataframe using pyspark schema #pysparkПодробнее

7. Solve using REGEXP_REPLACE | Top 10 PySpark Scenario Based Interview Question|Подробнее

Pyspark Scenarios 17 : How to handle duplicate column errors in delta table #pyspark #deltalake #sqlПодробнее

Pyspark Scenarios 16: Convert pyspark string to date format issue dd-mm-yy old format #pysparkПодробнее

6. How to handle multi delimiters| Top 10 PySpark Scenario Based Interview Question|Подробнее

Pyspark Scenarios 14 : How to implement Multiprocessing in Azure Databricks - #pyspark #databricksПодробнее

Pyspark Scenarios 13 : how to handle complex json data file in pyspark #pyspark #databricksПодробнее

Pyspark Scenarios 12 : how to get 53 week number years in pyspark extract 53rd week number in sparkПодробнее

Pyspark Scenarios 10:Why we should not use crc32 for Surrogate Keys Generation? #Pyspark #databricksПодробнее

Pyspark Scenarios 9 : How to get Individual column wise null records count #pyspark #databricksПодробнее

Pyspark Scenarios 8: How to add Sequence generated surrogate key as a column in dataframe. #pysparkПодробнее

Pyspark Scenarios 6 How to Get no of rows from each file in pyspark dataframe #pyspark #databricksПодробнее

Pyspark Scenarios 7 : how to get no of rows at each partition in pyspark dataframe #pyspark #azureПодробнее