6. How to handle multi delimiters | Top 10 PySpark Scenario-Based Interview Question

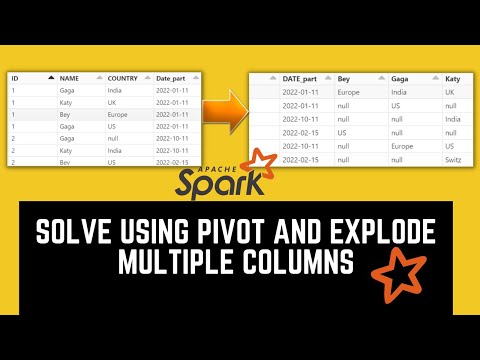

8. Solve Using Pivot and Explode Multiple columns | Top 10 PySpark Scenario-Based Interview Question

7. Solve using REGEXP_REPLACE | Top 10 PySpark Scenario-Based Interview Question

PySpark Scenarios 11: how to handle double delimiter or multi delimiters in PySpark

Spark Interview Question | Scenario Based Question | Multi Delimiter | LearntoSpark

Spark Interview Question | Scenario Based | Multi Delimiter | Using Spark with Scala | LearntoSpark

How to copy data from REST API multiple page response using ADF | Azure Data Factory Real Time

Understanding how to Optimize PySpark Job | Cache | Broadcast Join | Shuffle Hash Join

Top 15 Spark Interview Questions in less than 15 minutes Part-2

10 recently asked PySpark Interview Questions | Big Data Interview

Some Techniques to Optimize PySpark Job | PySpark Interview Question | Data Engineer

2. Explode columns using PySpark | Top 10 PySpark Scenario-Based Interview Question

PySpark Scenarios 15: how to take table DDL backup in Databricks