Operationalizing Ray Serve on Kubernetes

Advanced Model Serving Techniques with Ray on Kubernetes - Andrew Sy Kim & Kai-Hsun Chen

In Under 30 Minutes, Build A Scalable inference Service Using Ray Serve and Minikube

KubeRay: A Ray cluster management solution on Kubernetes

Introduction to Distributed ML Workloads with Ray on Kubernetes - Mofi Rahman & Abdel Sghiouar

Deploying LLMs on Kubernetes: Can KubeRay Help?

Distributed training with Ray on Kubernetes at Lyft

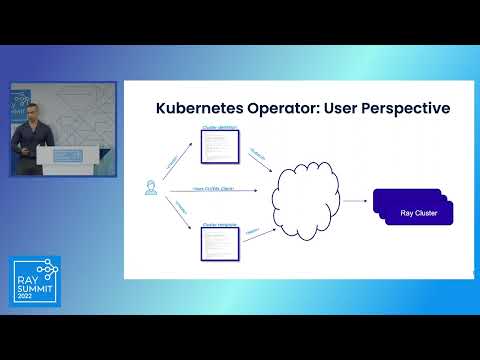

KubeRay - A Kubernetes Ray clustering solution

Trying the Ray Project on Kubernetes (2nd attempt)

apply() Conference 2022 | Bring Your Models to Production with Ray Serve

Deploying Ray Cluster on an Air-Gapped Kubernetes Cluster with Tight Security Control: Challenges an

The Different Shades of using KubeRay with Kubernetes

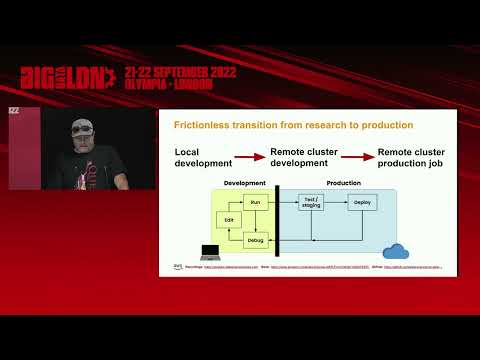

A cross-platform, cross-tool Ray on Kubernetes deployment

Deploying Many Models Efficiently with Ray Serve

Scaling AI & Machine Learning Workloads With Ray on AWS, Kubernetes, & BERT

Ray Serve: Tutorial for Building Real Time Inference Pipelines

Should you run Kubernetes at home? @TechnoTim says...

The open source AI compute tech stack: Kubernetes + Ray + PyTorch + vLLM

Enabling Cost-Efficient LLM Serving with Ray Serve

Running Ray on Kubernetes with KubeRay