Deploying Many Models Efficiently with Ray Serve

How to Deploy Ollama on Kubernetes | AI Model Serving on k8sПодробнее

Efficient LLM Deployment: A Unified Approach with Ray, VLLM, and Kubernetes - Lily (Xiaoxuan) LiuПодробнее

deploying many models efficiently with ray serveПодробнее

Klaviyo's Journey to Robust Model Serving with Ray Serve | Ray Summit 2024Подробнее

Enabling Cost-Efficient LLM Serving with Ray ServeПодробнее

Building Production AI Applications with Ray ServeПодробнее

Ray (Episode 4): Deploying 7B GPT using RayПодробнее

Introducing Ray Aviary | 🦜🔍 Open Source Multi-LLM ServingПодробнее

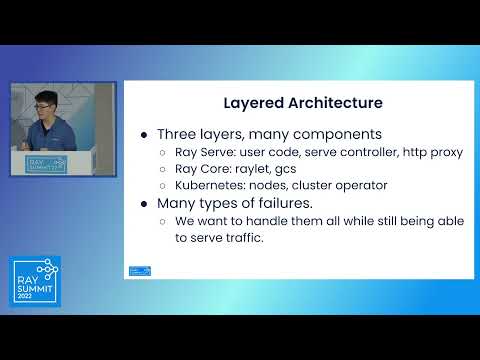

Highly available architectures for online serving in RayПодробнее

Multi-model composition with Ray Serve deployment graphsПодробнее

Leveraging the Possibilities of Ray ServeПодробнее

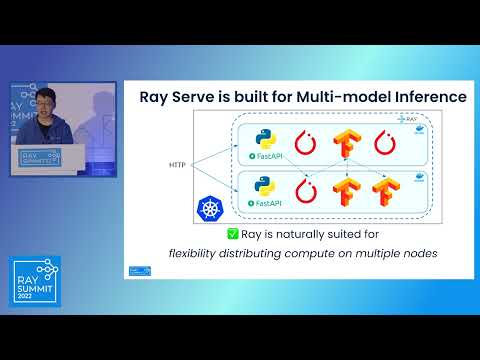

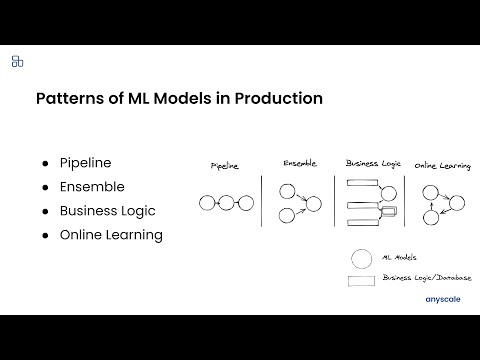

Ray Serve: Patterns of ML Models in ProductionПодробнее

Introducing Ray Serve: Scalable and Programmable ML Serving Framework - Simon Mo, AnyscaleПодробнее