How to dynamically change PYTHONPATH in pyspark app

Renaming Columns dynamically in a Dataframe in PySpark | Without hardcoding| Realtime scenario

Adding Columns dynamically to a Dataframe in PySpark | Without hardcoding | Realtime scenario

How to add any value dynamically to all the columns in pyspark dataframe

Data Validation with Pyspark || Rename columns Dynamically ||Real Time Scenario

Dynamic Partition Pruning | Spark Performance Tuning

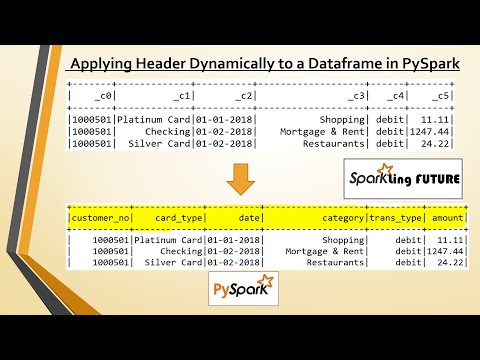

Applying headers dynamically to a Dataframe in PySpark | Without hardcoding schema

How to Set Your Own Schema, Change Data Types & Use Aliases in PySpark Guide 2025

Create Dynamic Dataframes in PySpark

Pyspark Scenarios 21 : Dynamically processing complex json file in pyspark #complexjson #databricks

Real-time Big Data Project Common Scenarios | How are Duplicates handled in PySpark #interview

Pyspark Scenarios 22 : How To create data files based on the number of rows in PySpark #pyspark

How to Standardize or Normalize Data with PySpark ❌Work with Continuous Features ❌PySpark Tutorial

How much does a DATA ENGINEER make?

PySpark Tutorial | Pyspark course | Setting Python, Java, Pyspark paths using PowerShell

Parallel table ingestion with a Spark Notebook (PySpark + Threading)

Getting The Best Performance With PySpark