Optimizers comparison: Adam, Nesterov, SPSA, momentum and gradient descent.

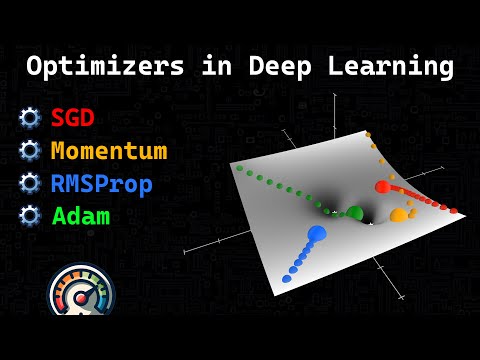

Optimization for Deep Learning (Momentum, RMSprop, AdaGrad, Adam)

Optimizers - EXPLAINED!

MOMENTUM Gradient Descent (in 3 minutes)

Who's Adam and What's He Optimizing? | Deep Dive into Optimizers for Machine Learning!

Deep Learning-All Optimizers In One Video-SGD with Momentum,Adagrad,Adadelta,RMSprop,Adam Optimizers

Top Optimizers for Neural Networks

Adam Optimizer Explained in Detail with Animations | Optimizers in Deep Learning Part 5

STOCHASTIC Gradient Descent (in 3 minutes)

Lecture 4.3 Optimizers

Optimizers in Neural Networks | Gradient Descent with Momentum | NAG | Deep Learning basics

Machine Learning Optimizers (BEST VISUALIZATION)

Gradient descent with momentum

Gradient Descent in 3 minutes

Adam Optimization Algorithm (C2W2L08)

Adam Optimizer Explained in Detail | Deep Learning

CS 152 NN—8: Optimizers—Nesterov with momentum

AdamW Optimizer Explained #datascience #machinelearning #deeplearning #optimization

(Nadam) ADAM algorithm with Nesterov momentum - Gradient Descent : An ADAM algorithm improvement