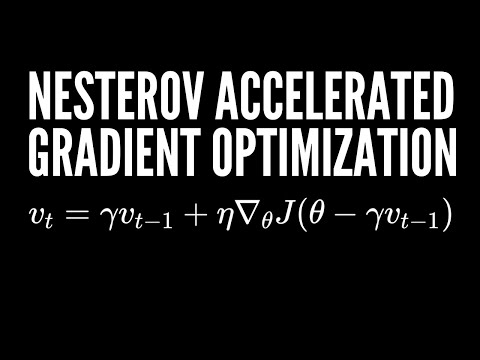

M2 Part 03 | Nesterov Accelerated Gradient | RMS prop | WORKING | EQUATIONS | IMPORTANT TOPICS IN 👇

ODE of Nesterov's accelerated gradient

Nesterov Accelerated Gradient (NAG) Explained in Detail | Animations | Optimizers in Deep Learning

Nesterov Accelerated Gradient NAG Optimizer

Nesterov's Accelerated Gradient

Part 3. Convergence of gradient descent and Nesterov's accelerated gradient using ODE

Optimization for Deep Learning (Momentum, RMSprop, AdaGrad, Adam)

Nesterov's Accelerated Gradient Method - Part 2

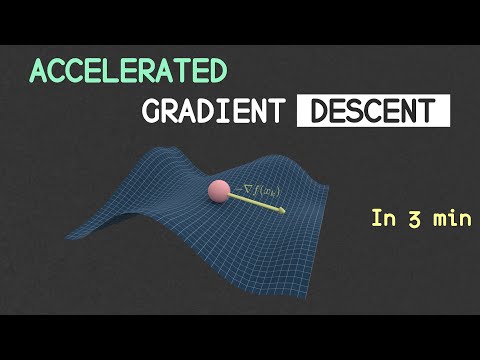

MOMENTUM Gradient Descent (in 3 minutes)

Nesterov Momentum update for Gradient Descent algorithms

Nesterov Accelarated Gradient Descent

Deep Learning(CS7015): Lec 5.5 Nesterov Accelerated Gradient Descent

Nesterov's Accelerated Gradient Method - Part 1

GRADIENT DESCENT ALGORITHM IN 15s

Adadelta, RMSprop and Adam Optimizers Deep learning part-03

What is GRADIENT DESCENT?

On momentum methods and acceleration in stochastic optimization

Optimization in machine learning (Part 03) AdaGrad - RMSProp - AdaDelta - Adam

NN - 26 - SGD Variants - Momentum, NAG, RMSprop, Adam, AdaMax, Nadam (NumPy Code)