How to get the count of records in each file present in a folder using PySpark

Pyspark Scenarios 6 How to Get no of rows from each file in pyspark dataframe #pyspark #databricksПодробнее

How to get the count of data from each file in Databricks|PySpark Tutorial |Data EngineeringПодробнее

Pyspark Scenarios 5 : how read all files from nested folder in pySpark dataframe #pyspark #sparkПодробнее

How to get row count from each partition file | PySpark Real Time ScenarioПодробнее

How to Get the Count of Null Values Present in Each Column of dataframe using PySparkПодробнее

70. Databricks| Pyspark| Input_File_Name: Identify Input File Name of Corrupt RecordПодробнее

File Size Calculation using pysparkПодробнее

Pyspark Scenarios 9 : How to get Individual column wise null records count #pyspark #databricksПодробнее

113. Databricks | PySpark| Spark Reader: Skip Specific Range of Records While Reading CSV FileПодробнее

Count Rows In A Dataframe | PySpark Count() Function |Basics of Apache SparkПодробнее

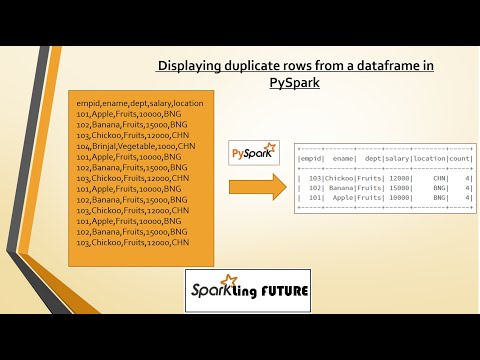

Displaying duplicate records in PySpark | Using GroupBy | Realtime ScenarioПодробнее

How to Get Record Count of All CSV and Text Files from Folder and Subfolders in SSIS PackageПодробнее

41. Count Rows In A Dataframe | PySpark Count() FunctionПодробнее

PySpark Tutorial | Resilient Distributed Datasets(RDD) | PySpark Word Count ExampleПодробнее

33. How to access and count files and directories in folder in python.Подробнее

Python Mini Programs in VS Code: Top 10 Most Frequent Words in a Text File 📄🐍| Dictionary & Sorting!Подробнее

How to Load All CSV Files in a Folder with pysparkПодробнее

select all within folders gives file countПодробнее

6. How to Write Dataframe as single file with specific name in PySpark | #spark#pyspark#databricksПодробнее