How to Filter a Nested Array Column in Spark SQL

How to Query Nested JSON Columns in Spark SQL

Spark SQL higher order functions

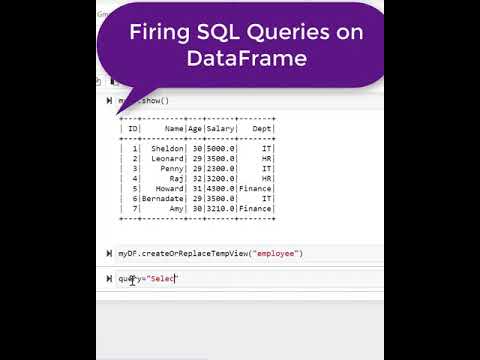

Firing SQL Queries on DataFrame. #shorts #Pyspark #hadoop

Spark Basics: Filter Empty/Non Empty Arrays In Dataframes

How to filter Spark dataframe by array column containing any of the values of some other datafra...

How to Move a Spark DataFrame's Columns to a Nested Column

How to Filter Nested Columns in a PySpark DataFrame

How to use PySpark Where Filter Function ?

12. Explode nested array into rows | Interview Questions | PySpark PART 12

Materialized Column: An Efficient Way to Optimize Queries on Nested Columns

54. How to filter records using array_contains in pyspark | #pyspark PART 54

PySpark Examples - How to handle Array type column in spark data frame - Spark SQL

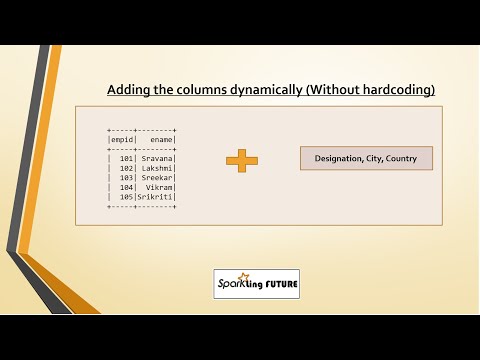

Adding Columns Dynamically to a DataFrame in Spark SQL using Scala

How to apply Filter in spark dataframe based on other dataframe column|Pyspark questions and answers

How to Get All Combinations of an Array Column in Spark Using Built-in Functions

Transforming Arrays and Maps in PySpark : Advanced Functions_ transform(), filter(), zip_with()

How to Extract Nested JSON Values as Columns in Apache Spark using Scala

Spark SQL - Basic Transformations - Filtering Data

Filtering Rows in Spark DataFrames with Complex Structures