Deploy ML Model with KServe to Production | MLOps

How to Create a Custom Serving Runtime in KServe ModelMesh to S... - Rafael Vasquez & Christian Kadner

Custom Code Deployment with KServe and Seldon Core

Continuous Machine Learning Deployment with ZenML and KServe: ZenML Meet The Community (03/08/2022)

Deploying ML Models in Production: An Overview

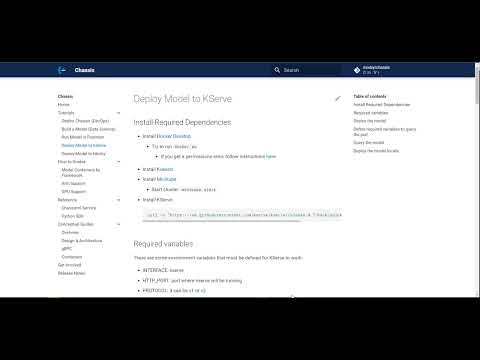

Open-source Chassis.ml - Deploy Model to KServe

Serving Machine Learning Models at Scale Using KServe - Yuzhui Liu, Bloomberg

KServe (Kubeflow KFServing) Live Coding Session // Theofilos Papapanagiotou // MLOps Meetup #83

Serving Machine Learning Models at Scale Using KServe - Animesh Singh, IBM - KubeCon North America