Deploy and Use any Open Source LLMs using RunPod

Better Than RunPod? RunC.AI LLM Deploy and Inference

Deploy and use any open source LLMs using RunPod

How to Self-Host DeepSeek on RunPod in 10 Minutes

Deploying Quantized Llama 3.2 Using vLLM

Deploy Molmo-7B, an Open-Source Multimodal LLM, on RunPod

Deploying a multimodal LLM with Pixtral on a VPS on RunPod fast

Deploying open source LLM models 🚀 (serverless)

Run Llama 3.1 405B with Ollama on RunPod (Local and Open Web UI)

Deploy LLMs using Serverless vLLM on RunPod in 5 Minutes

Deploying Open Source LLM Model on RunPod Cloud with LangChain Tutorial

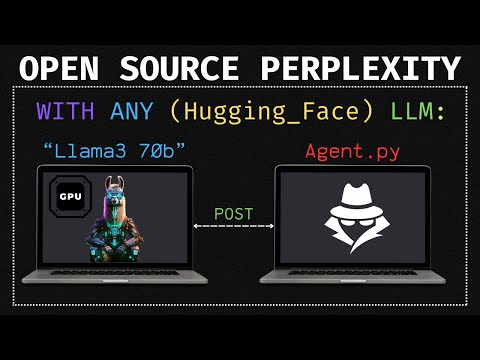

Build Open Source "Perplexity" agent with Llama 3 70B & RunPod - Works with Any Hugging Face LLM!

How to get Llama 3 UNCENSORED with RunPod & vLLM

How to Run Any LLM using Cloud GPUs and Ollama with Runpod.io

Silly Tavern: Use Any HuggingFace Models with RUNPOD.IO for $3/hr

How to run Miqu in 5 minutes with vLLM, RunPod, and no code - Mistral leak

LLM Projects - How to use Open Source LLMs with AutoGen – Deploying Llama 2 70B Tutorial

Host your own LLM in 5 minutes on RunPod, and set up an API endpoint for it.

Get Started Using Open Source LLMs: Mistral/OpenHermes

EASIEST Way to Custom Fine-Tune Llama 2 on RunPod