Demo: Efficient FPGA-based LLM Inference Servers

Positron Demo Live AI LLM Versus GPUs Using Altera Agilex 7 M-Series FPGAs

Demo: Sketch Recognition AI on Agilex™ 7 SoC FPGA | ResNet50 & AI Inference

BrainChip Demonstration of LLM Inference On an FPGA at the Edge using the TENNs Framework

Demo: Agilex™ 3 FPGA: High-Performance, AI-Optimized, and Secure | Embedded Systems & HPC

FPGA AI Suite Software Emulation Demo | Run AI Inference Without Hardware Using OpenVINO™

FPGA Transmitter Demo (Home Lab)

MicroRec: Efficient Recommendation Inference on FPGAs

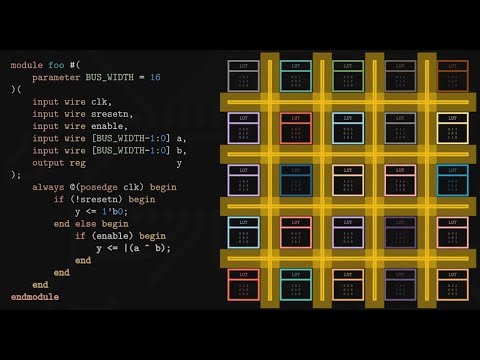

What's an FPGA?

Unlocking the Full Potential of FPGAs for Real-Time ML Inference, by Salvador Alvarez, Achronix

Nvidia CUDA in 100 Seconds

[FPGA 2022] An FPGA-based RNN-T Inference Accelerator with PIM-HBM

Intel Demonstration of Vision Inference Acceleration with FPGAs

Let's have a quick look at an FPGA-SoC

Demo | LLM Inference on Intel® Data Center GPU Flex Series | Intel Software